-

3D Recurrent Neural Networks with Context Fusion for Point Cloud Semantic Segmentation

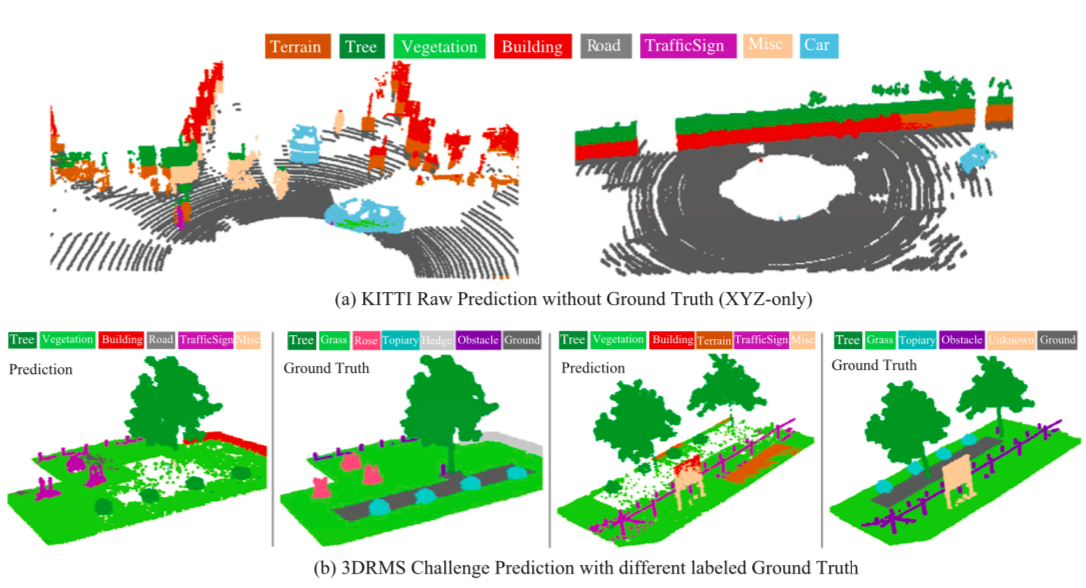

Semantic segmentation of unstructured 3D point clouds remains an open research problem. Recent works predict semantic labels of 3D points using neural networks but take limited contextual knowledge into account. In this paper, a novel end-to-end approach for unstructured point cloud semantic segmentation, named 3P-RNN, is proposed to exploit the inherent contextual features. First, an efficient point-wise pyramid pooling module is investigated to capture local structures at various densities by taking multi-scale neighborhoods into account. Then, two-direction hierarchical recurrent neural networks (RNNs) are utilized to explore long-range spatial dependencies. Each recurrent layer takes as input the local features derived from unrolled cells and sweeps the 3D space along two directions successively to integrate structure knowledge. On challenging indoor and outdoor 3D datasets, the proposed framework demonstrates robust performance superior to the state of the art.
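As a rough illustration of pooling over multi-scale neighborhoods, the sketch below max-pools each point's features over its k nearest neighbors for several values of k and concatenates the results. The abstract does not specify how neighborhoods or pooling are defined in 3P-RNN, so the k-nearest-neighbor grouping, the max operator, the `scales` values, and the function names are all assumptions made for this example.

```python
import math

def knn_indices(points, i, k):
    """Indices of the k points nearest to points[i], including i itself."""
    order = sorted(range(len(points)),
                   key=lambda j: math.dist(points[i], points[j]))
    return order[:k]

def pyramid_pool(points, features, scales=(2, 4)):
    """Max-pool per-point features over neighborhoods of several sizes.

    Assumption: neighborhoods are k-nearest-neighbor sets and pooling is
    a channel-wise max; the paper's actual module may differ.
    """
    dim = len(features[0])
    pooled = []
    for i in range(len(points)):
        row = []
        for k in scales:
            nbrs = knn_indices(points, i, k)
            # Channel-wise max over the neighborhood at this scale.
            row.extend(max(features[j][d] for j in nbrs) for d in range(dim))
        pooled.append(row)
    return pooled

points = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (10, 0, 0)]
features = [[1.0], [2.0], [3.0], [9.0]]
multi_scale = pyramid_pool(points, features)
```

Each output row has one block of channels per scale, so small neighborhoods contribute fine local detail while large ones contribute coarser context.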

-

Robust Stereo Visual Odometry Using Improved RANSAC-based Methods for Mobile Robot Localization

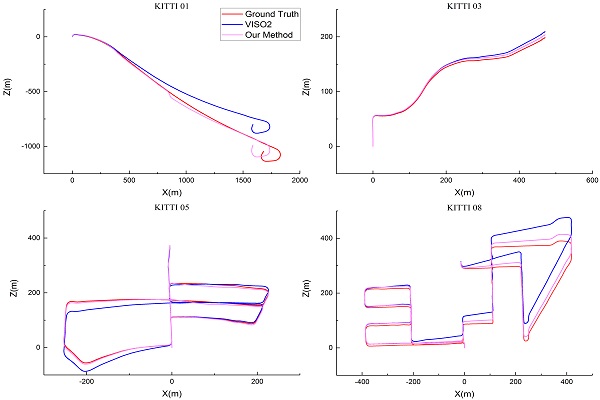

In this paper, we present a novel approach for stereo visual odometry with robust motion estimation that is faster and more accurate than standard RANSAC. Our method improves RANSAC in three aspects: first, hypotheses are preferentially generated by sampling the input feature points in order of the ages and similarities of the features; second, hypotheses are evaluated with the Sequential Probability Ratio Test, which discards bad hypotheses quickly without verifying all the data points; third, we aggregate the three best hypotheses to obtain the final estimate instead of selecting only the best hypothesis. The first two aspects improve the speed of RANSAC by generating good hypotheses and discarding bad hypotheses in advance, respectively.
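The early-rejection idea behind the Sequential Probability Ratio Test can be sketched as follows. This is a generic Wald-style test as commonly used in randomized RANSAC variants, not necessarily the exact formulation of this paper. The parameters `eps` (inlier probability under a good hypothesis), `delta` (inlier probability under a bad hypothesis), the decision threshold `A`, and the function name are illustrative assumptions.

```python
def sprt_evaluate(residuals, threshold, eps=0.5, delta=0.05, A=100.0):
    """Evaluate one hypothesis point by point with early rejection.

    Returns (accepted, inlier_count, points_checked).
    Assumption: eps, delta, and A are hand-picked illustrative values.
    """
    likelihood_ratio = 1.0  # P(data | bad model) / P(data | good model)
    inliers = 0
    for checked, r in enumerate(residuals, start=1):
        if abs(r) <= threshold:
            inliers += 1
            likelihood_ratio *= delta / eps
        else:
            likelihood_ratio *= (1.0 - delta) / (1.0 - eps)
        if likelihood_ratio > A:
            # Evidence for "bad model" is strong enough: stop early.
            return False, inliers, checked
    return True, inliers, len(residuals)

bad = sprt_evaluate([10.0] * 100, threshold=1.0)
good = sprt_evaluate([0.1] * 100, threshold=1.0)
```

With these values a hypothesis that fits none of the points is rejected after 8 of the 100 residuals, while a hypothesis that fits every point is checked against all of them and accepted.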

-

Order-Based Disparity Refinement Including Occlusion Handling for Stereo Matching

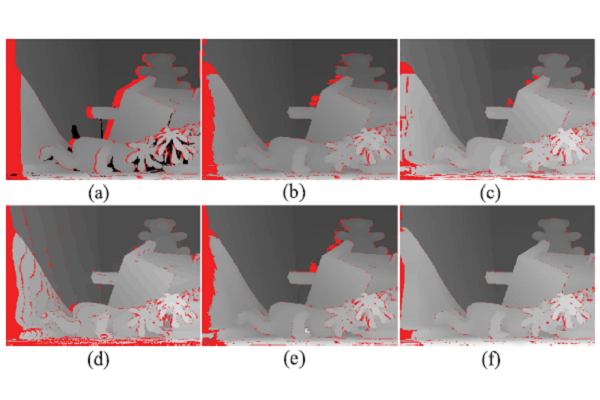

Accurate stereo matching is still challenging in weakly textured areas, at discontinuities, and in occluded regions. Moreover, occlusion recovery is often regarded as a subordinate problem and handled simplistically. To obtain dense, high-accuracy depth maps, this letter proposes an efficient multistep disparity refinement framework with occlusion handling. The framework classifies the outliers into leftmost occlusions, non-border occlusions, and mismatches, and employs a different strategy to recover each class. To recover occlusions, a filling order is introduced to avoid error propagation, and a surface decision based on local image content is performed when more than one background surface exists. Evaluations on the Middlebury datasets and comparisons with other refinement algorithms show the superiority and robustness of our method.

-

Ground Plane Detection with a New Local Disparity Texture Descriptor

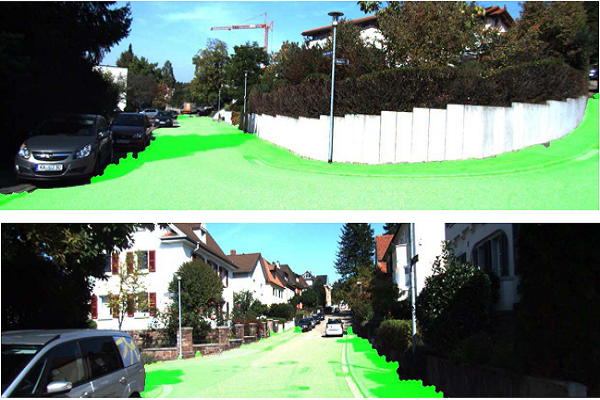

In this paper, a novel approach is proposed for stereo vision-based ground plane detection at the superpixel level, which is implemented by employing a Disparity Texture Map in a convolutional neural network architecture. In particular, the Disparity Texture Map is calculated with a new Local Disparity Texture Descriptor (LDTD). The experimental results demonstrate superior performance on the KITTI dataset.

-

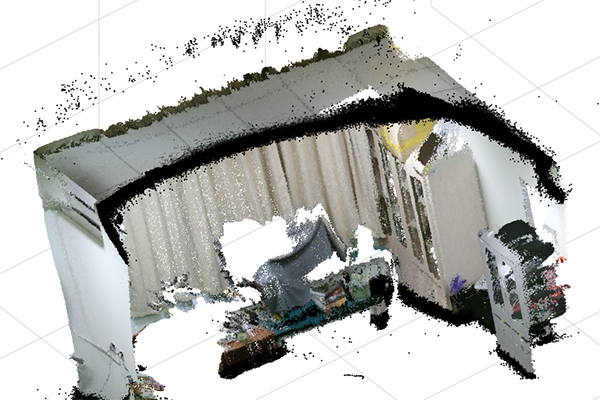

Effective Indoor Localization and 3D Point Registration Based on Plane Matching Initialization

Effective indoor localization is an essential part of VR (Virtual Reality) and AR (Augmented Reality) technologies. Tracking with an RGB-D camera has become increasingly popular since it captures relatively accurate color and depth information at the same time. With the recovered colored point cloud, the traditional ICP (Iterative Closest Point) algorithm can be used to estimate the camera poses and reconstruct the scene. However, many works focus on improving ICP for general scenes and ignore the practical significance of effective initialization under specific conditions, such as indoor scenes for VR or AR.